Kafka Event-Driven Architecture Done Right + Real Examples

Imagine a world where data isn't trapped in different databases anymore. It becomes more like a living, breathing thing as it streams through your applications – instantly triggering actions and guiding decisions in real time. Kafka event-driven architecture gives you the power to jump headfirst into this incredible transformation.

From IoT applications managing billions of devices to data streaming platforms powering the latest AI and machine learning advancements – Kafka has penetrated every corner of the digital landscape. Today, it is used by over 80% of Fortune 100 companies and thousands of others.

If all these big players are reaping the benefits of Kafka, you can too. But “how” is the big question here. Don't worry, we have the answer in this guide.

Learn all about event-driven architecture patterns and see how Kafka empowers their implementation. Let’s dive in!

Event-Driven Architecture Explained: Everything You Need To Know

Event-Driven Architecture (EDA) is a design paradigm in which software components react to events. In EDA, an event represents a significant state change. For example, it could be something that happens in the outside world, like a user clicking on a button, or something that happens inside a system, like a program throwing an error.

Key advantages of EDA include:

- Flexibility: Because services communicate only through events, you can add new microservices to the application without modifying the existing ones.

- Reduces system failure: With EDA, if one service fails, it doesn't cause other services to fail. An event router serves as a buffer that stores events and reduces the risk of system-wide failures.

- Decoupling of producers and consumers: EDA allows services to communicate and operate independently, with neither the producer nor the consumer being aware of the other. This independence helps improve response times and system resiliency.

However, implementing EDA also comes with challenges. You need to design the system carefully so that all events are properly routed and processed, and you need an effective monitoring mechanism to track the flow of events through the system.

Event-Driven Architecture Patterns: Building Scalable & Resilient Systems

Event-driven architecture is a powerful system design approach that can be implemented in different ways. When using Apache Kafka, there are several common patterns that developers often use. Let’s take a look at some of these patterns.

Event Notification

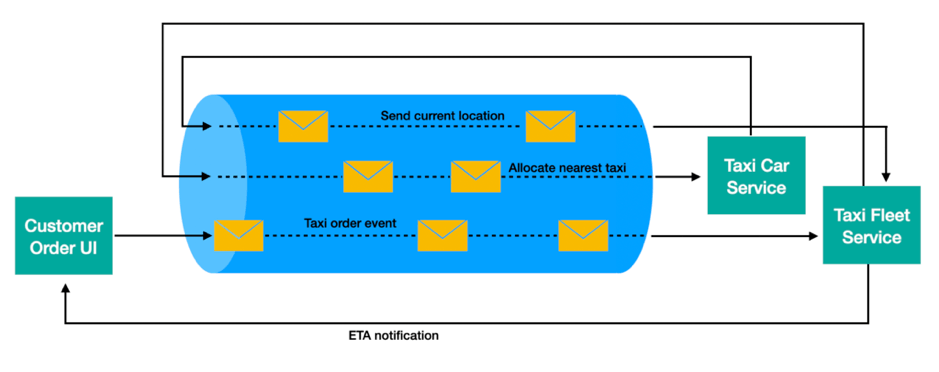

Event Notification is a straightforward pattern where a microservice broadcasts an event to signal a change in its domain.

For instance, when a new user joins, a user account service generates a notification event. Other services can choose to act on this information or ignore it. The events typically contain minimal data which results in a loosely coupled system with less network traffic for messaging.
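
As a minimal sketch of this pattern, the snippet below uses a small in-memory publish/subscribe helper in place of a real Kafka topic. The `subscribe` and `publish` helpers, the `UserSignedUp` event type, and the `user-signup-events` topic name are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Callable

# In-memory stand-in for an event router; a real system would publish to a Kafka topic.
subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

def subscribe(topic: str, handler: Callable[[dict], None]) -> None:
    """Register a handler that is called for every event on the topic."""
    subscribers[topic].append(handler)

def publish(topic: str, event: dict) -> None:
    """Deliver the event to every handler subscribed to the topic."""
    for handler in subscribers[topic]:
        handler(event)

welcome_emails_queued: list[str] = []

def queue_welcome_email(event: dict) -> None:
    # The notification carries only an ID; a consumer fetches details if it needs them.
    welcome_emails_queued.append(event["user_id"])

subscribe("user-signup-events", queue_welcome_email)
publish("user-signup-events", {"type": "UserSignedUp", "user_id": "u-123"})
```

The event holds just enough to say what happened and to whom, which keeps the producer unaware of what its consumers do with it.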

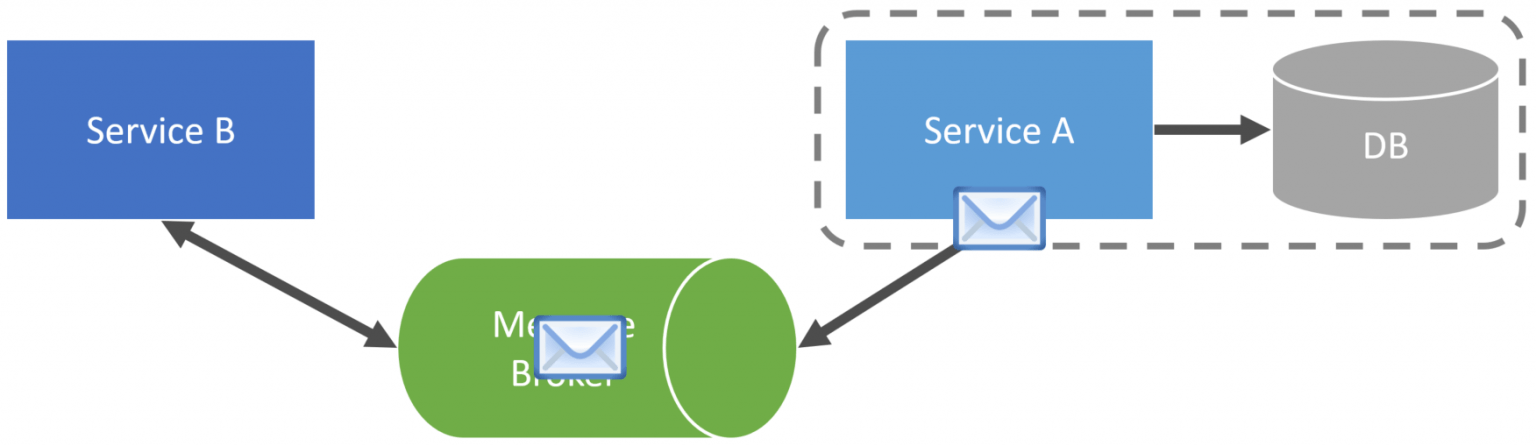

Event Carried State Transfer

This pattern takes event notification a step further. Here, the event not only signals a notification but also carries the requisite data for the recipient to respond to the event.

For example, when a new user joins, the user account service sends an event with a data packet that has the new user's login ID, full name, hashed password, and other relevant information. This design can create more data traffic on the network and potential data duplication in storage, especially in more complex systems.
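
To make the difference from a plain notification concrete, here is a rough sketch in which the event carries the user's data and a consuming service keeps its own local copy. The field names and the `on_user_created` handler are hypothetical.

```python
# Hypothetical event: unlike a bare notification, it carries the data consumers need.
user_created = {
    "type": "UserCreated",
    "user_id": "u-123",
    "login_id": "jdoe",
    "full_name": "Jane Doe",
}

# Local copy kept by a consuming service (for example, a billing service).
local_users: dict[str, dict] = {}

def on_user_created(event: dict) -> None:
    # Store only the fields this service needs; no call back to the producer is required.
    local_users[event["user_id"]] = {
        "login_id": event["login_id"],
        "full_name": event["full_name"],
    }

on_user_created(user_created)
```

The trade-off mentioned above shows up directly: every consumer that keeps such a copy duplicates part of the producer's data.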

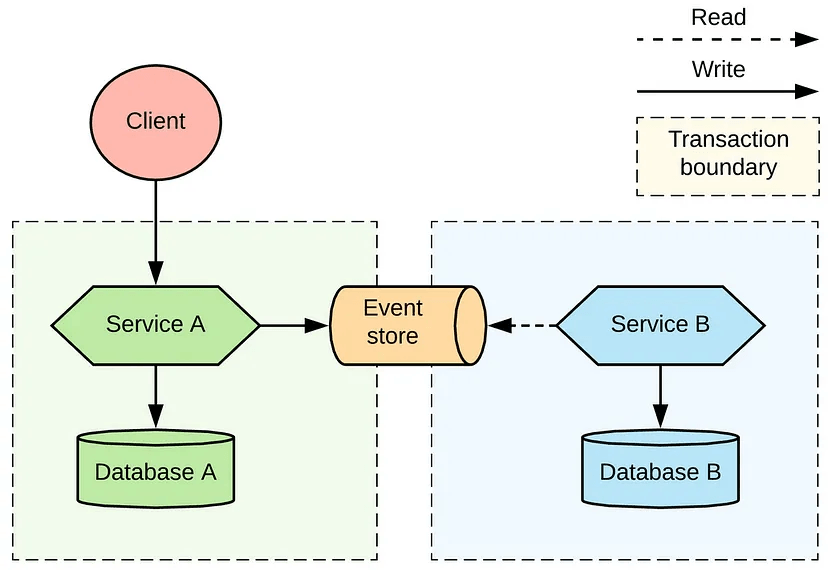

Event Sourcing

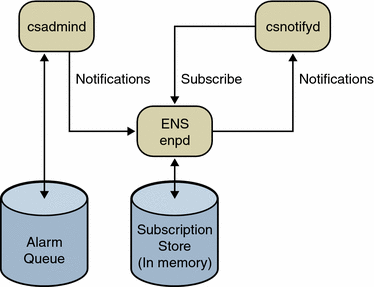

Event sourcing is a more complex pattern that captures every state change in a system as an event. Each event is recorded in chronological order so that the event stream is the primary source of truth for the system.

In theory, you should be able to "replay" a sequence of events to bring back the exact state of a system at any given time. This pattern has a lot of potential but can be challenging to implement correctly, especially when events involve interactions with external systems.
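
The replay idea can be sketched in a few lines. The example below assumes a hypothetical account with `Deposited` and `Withdrawn` events and rebuilds the balance by folding over the event history. It is a simplified illustration and leaves out concerns such as snapshots and external side effects.

```python
from functools import reduce
from typing import Optional

# Hypothetical account events, recorded in chronological order.
events = [
    {"type": "Deposited", "amount": 100},
    {"type": "Withdrawn", "amount": 30},
    {"type": "Deposited", "amount": 50},
]

def apply(balance: int, event: dict) -> int:
    """Apply one event to the current state and return the new state."""
    if event["type"] == "Deposited":
        return balance + event["amount"]
    if event["type"] == "Withdrawn":
        return balance - event["amount"]
    return balance  # Unknown event types are ignored in this sketch.

def replay(history: list, upto: Optional[int] = None) -> int:
    """Rebuild state from the first `upto` events (all events if None)."""
    return reduce(apply, history[:upto], 0)

current_balance = replay(events)        # State after all events
earlier_balance = replay(events, 2)     # State after the first two events
```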

Event-Driven Architectures And Popular Event Streaming Platforms

Apache Kafka

Apache Kafka is one of many powerful tools for orchestrating event-driven architectures. Other services like Pulsar and Gazette each have distinct advantages worth considering.

Before we look at those platforms, let’s go through Kafka's key features and components to see its central role in modern data processing and why tens of thousands of companies use it.

Kafka routes events in event-driven architectures and creates robust, scalable, and efficient systems for handling real-time data. It is fault-tolerant and durable and handles large amounts of data with ease.

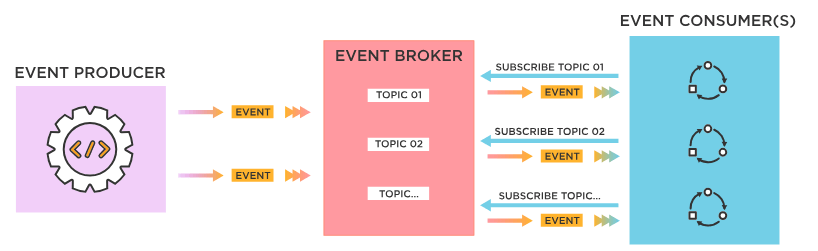

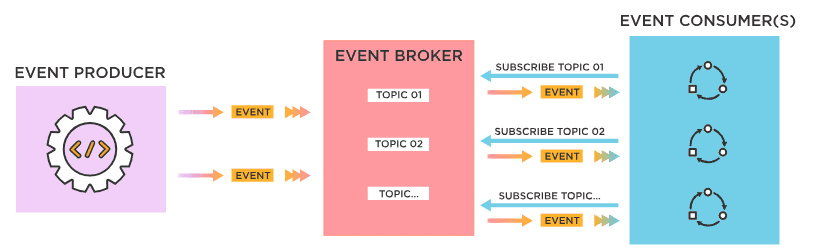

Kafka's architecture is built around the concept of producers, consumers, brokers, and topics.

- Producers are the sources of data. They generate and send events to Kafka. These events can be anything from user activities on a website to sensor readings in an IoT system. Producers send these events to specific topics in Kafka which initiates the event-driven process.

- Consumers are the recipients of the data. They subscribe to one or more topics in Kafka and process the events that the producers sent. In an event-driven architecture, these consumers react to the events and perform actions based on the information contained in the events. Consumers in Kafka are typically grouped into consumer groups for load balancing and failover purposes.

- Brokers are the Kafka servers that store and process events. They receive events from producers, store them in topics, and serve them to consumers. A Kafka cluster is composed of one or more brokers. This lets the system carry out distributed processing and storage of events.

- Topics are the categories or feeds to which producers write and from which consumers read. Each topic in Kafka is split into partitions and each partition can be hosted on a different server. This means that a single topic can be scaled across multiple nodes.
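
To illustrate how a topic's partitions spread events across nodes, the sketch below assigns keyed events to partitions by hashing the key. Note the assumption: it uses CRC32 from Python's standard library as a stand-in hash, whereas Kafka's default partitioner uses a different hash function (murmur2), so the actual partition numbers will differ.

```python
import zlib

def choose_partition(key: str, num_partitions: int) -> int:
    # Stand-in hash for illustration; Kafka's default partitioner uses murmur2, not CRC32.
    return zlib.crc32(key.encode("utf-8")) % num_partitions

# Three in-memory lists play the role of a topic's three partitions.
partitions: dict[int, list] = {p: [] for p in range(3)}

for key, value in [("user-1", "login"), ("user-2", "login"), ("user-1", "logout")]:
    partitions[choose_partition(key, 3)].append((key, value))
```

Because the same key always hashes to the same partition, all events for `user-1` land in one partition and keep their relative order, which is what lets consumers in a group split the work by partition.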

Kafka is commonly used together with several companion components.

- Kafka Streams is a client library for building applications and microservices where the input and output data are stored in Kafka clusters. This stream-processing library is used in an event-driven architecture to create real-time applications that respond to streams of events.

- Kafka Connect provides an interface for connecting Kafka with external systems like databases, key-value stores, search indexes, and file systems. In an event-driven architecture, Kafka Connect is used to stream data changes from databases and other systems into Kafka topics, and from Kafka topics into these systems.

- Apache ZooKeeper is a separate coordination service that Kafka has traditionally used to manage its clusters of brokers, maintain configuration information, and keep track of critical metadata like broker details, topic configurations, and access control lists. Newer Kafka versions can instead run in KRaft mode, which manages this metadata inside Kafka itself without ZooKeeper.

Apache Pulsar

Apache Pulsar is another powerful contender, offering a range of unique features and benefits. As with Kafka, it's important to consider Pulsar's capabilities and how it could work as an alternative to Kafka in your data processing pipeline.

Some of the key features and components include:

- Pulsar is built around the concepts of producers, consumers, brokers, namespaces, and topics.

- Similar to Kafka, producers generate events, while consumers receive and process them. Pulsar allows for fine-grained message acknowledgment, providing flexibility in handling message delivery.

- Brokers receive events from producers and serve them to consumers, while durable storage is delegated to Apache BookKeeper. Topics serve as the conduit through which producers send events and consumers receive them.

- Pulsar introduces namespaces, a higher-level grouping of topics. This feature enables multi-tenancy and facilitates organization-wide management of topics.

Benefits of Pulsar:

Pulsar brings several advantages to the table, which makes it an appealing choice when implementing event-driven architectures.

- Multi-tenancy support: Pulsar's use of namespaces enables efficient isolation of resources among different teams or applications within the same cluster, which enhances security and resource management.

- Horizontal scalability: Pulsar's architecture is designed for seamless horizontal scaling, allowing organizations to expand their event processing capabilities as their needs grow.

- Built-in geo-replication: Pulsar provides integrated support for replicating data across multiple geographic locations, ensuring data availability and reliability even in the event of a regional failure.

Gazette

While Apache Kafka and Pulsar are popular choices, Gazette presents a distinctive approach to event-driven architectures, offering its own set of advantages. Let’s explore some of Gazette’s features to get a better understanding of how it serves as an alternative to other event streaming platforms.

Gazette's design revolves around the concept of journals, consumers, brokers, and replicas.

- Brokers: One of the key advantages of Gazette is its use of ephemeral brokers that make scaling a breeze. What’s more, Gazette doesn't require proxying historical reads through brokers. Instead, you can interact with Cloud storage directly, which makes it really efficient for backfills.

- Journals: Journals serve as append-only logs where events are written. They are organized hierarchically and can be segmented to optimize storage and retrieval.

- Consumers: Consumers read events from journals and process them. They can be distributed across multiple nodes for load balancing.

Benefits of Gazette:

Gazette introduces distinctive features that set it apart as a reliable solution for event-driven architectures:

- Delegated storage and stream consumption: Gazette enables delegated storage through S3 or other BLOB stores. This allows seamless consumption of journals as real-time streams using any tool proficient in handling files within cloud storage. Gazette also facilitates the structured organization of journal collections, effectively transforming them into partitioned data lakes.

- Flexible data formats: Gazette allows for the production of records in a variety of formats, such as Protobuf, JSONL, and CSV. Additionally, cloud storage in Gazette retains raw records without imposing any specific file format, streamlining data handling.

- Cost efficiency: Gazette demonstrates noteworthy cost efficiency in comparison to persistent disks, particularly when factoring in replication expenses. This is further bolstered by zone-aware brokers and clients which effectively mitigate cross-zone reads.

Setting Up Kafka For Event-Driven Architectures: A Step-By-Step Guide

Event streaming with Apache Kafka is an effective way to build responsive, scalable applications. It captures events from various systems and processes them in real-time so you can react quickly to them.

To set up an effective event-driven architecture using Kafka, you should carefully plan and configure your Kafka cluster, topics, producers, and consumers. Let’s take a look at all the key steps to set up Kafka correctly for event streaming.

Step 1: Install Kafka

First, install Kafka on your machines, either on-premises or through a managed cloud service. Older Kafka versions require ZooKeeper, while newer releases can run in KRaft mode without it, so check which setup your version uses and pick the latest stable release.

Step 2: Create Topics

Create topics specifically for each event type like user-signup-events, payment-events, etc. Think about the event types you need upfront. Choose partition count and replication factor appropriately.
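
One way to think about this step is to write your topic plan down and sanity-check it before creating anything. The sketch below is only a planning aid in plain Python, not a Kafka API: the `TopicSpec` class, the `validate` helper, and the partition counts are all hypothetical. The one rule it encodes is that a topic's replication factor cannot be larger than the number of brokers in the cluster.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TopicSpec:
    name: str
    partitions: int
    replication_factor: int

def validate(spec: TopicSpec, broker_count: int) -> list:
    """Return a list of problems with the planned topic (empty if none)."""
    problems = []
    if spec.partitions < 1:
        problems.append("partition count must be at least 1")
    if spec.replication_factor < 1:
        problems.append("replication factor must be at least 1")
    if spec.replication_factor > broker_count:
        problems.append("replication factor cannot exceed the number of brokers")
    return problems

# Hypothetical plan: one topic per event type.
topics = [
    TopicSpec("user-signup-events", partitions=6, replication_factor=3),
    TopicSpec("payment-events", partitions=12, replication_factor=3),
]
```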

Step 3: Configure Producers

Next, write the producer applications that will publish events to Kafka topics. Specify the bootstrap servers and an appropriate serialization format, and configure acks and retries for reliable delivery.
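
As a rough sketch, a reliability-focused producer configuration might look like the dictionary below. The keys are standard Kafka producer settings, but the values (broker address, retry count) are assumptions for illustration, and the JSON `serialize` helper is just one possible serialization choice. You would pass a configuration like this to whichever Kafka client library you use.

```python
import json

# Assumed values for illustration; adjust to your cluster and client library.
producer_config = {
    "bootstrap.servers": "localhost:9092",  # Assumed broker address
    "acks": "all",                          # Wait for all in-sync replicas to acknowledge
    "retries": 5,                           # Retry transient send failures
    "enable.idempotence": True,             # Avoid duplicates caused by producer retries
}

def serialize(event: dict) -> bytes:
    """Encode an event as UTF-8 JSON bytes (one possible serialization format)."""
    return json.dumps(event, sort_keys=True).encode("utf-8")

message_value = serialize({"type": "UserSignedUp", "user_id": "u-123"})
```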

Step 4: Configure Consumers

Now write the consumer applications that will subscribe to the topics and process incoming events. Set deserializers and consumer group id correctly. Make sure that your code can handle failures and rebalancing efficiently.
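
The failure-handling part is easier to see in code. The sketch below simulates a consumer against an in-memory list of records instead of a real broker: it only advances its committed offset after a record is processed successfully, so a failed record is picked up again on the next run. The configuration keys are standard Kafka consumer settings, while the values and the `consume` helper are assumptions for illustration.

```python
import json

# Assumed values for illustration; adjust to your cluster and client library.
consumer_config = {
    "bootstrap.servers": "localhost:9092",  # Assumed broker address
    "group.id": "signup-email-service",     # Hypothetical consumer group
    "auto.offset.reset": "earliest",        # Start from the beginning if no offset is stored
    "enable.auto.commit": False,            # Commit manually, after processing
}

def deserialize(raw: bytes) -> dict:
    """Decode UTF-8 JSON bytes back into an event."""
    return json.loads(raw.decode("utf-8"))

def consume(log: list, committed_offset: int, handler) -> int:
    """Process records from committed_offset onward and return the new committed offset."""
    offset = committed_offset
    for raw in log[committed_offset:]:
        try:
            handler(deserialize(raw))
        except Exception:
            break  # Stop without committing so this record is retried later.
        offset += 1  # "Commit" only after successful processing.
    return offset
```

Committing after processing gives at-least-once behavior: nothing is skipped, but a record can be handled more than once, so handlers should tolerate repeats.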

Step 5: Define Streaming Pipelines

For more complex event processing, use the Kafka Streams library to define event streaming pipelines. It lets you transform, enrich, and aggregate events easily.
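
To show the shape of such a pipeline, here is a small sketch that chains transform, enrich, and aggregate stages. It uses plain Python generators rather than the Kafka Streams API (which is a Java library), and the payment events and the `COUNTRY_BY_USER` lookup table are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator

# Hypothetical lookup table used for enrichment.
COUNTRY_BY_USER = {"u-1": "UK", "u-2": "DE"}

def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Drop events without a positive amount."""
    for event in events:
        if event["amount_cents"] > 0:
            yield event

def enrich(events: Iterable[dict]) -> Iterator[dict]:
    """Attach the user's country to each event."""
    for event in events:
        yield {**event, "country": COUNTRY_BY_USER.get(event["user_id"], "unknown")}

def aggregate(events: Iterable[dict]) -> Counter:
    """Sum payment amounts (in cents) per country."""
    totals: Counter = Counter()
    for event in events:
        totals[event["country"]] += event["amount_cents"]
    return totals

payments = [
    {"user_id": "u-1", "amount_cents": 1250},
    {"user_id": "u-2", "amount_cents": 800},
    {"user_id": "u-1", "amount_cents": 250},
    {"user_id": "u-2", "amount_cents": 0},  # Filtered out by transform
]
totals = aggregate(enrich(transform(payments)))
```

A stream processing framework runs the same kind of stages continuously over unbounded topics and also takes care of state and partitioning for you.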

Step 6: Monitor The Cluster

Continuously monitor the Kafka cluster for throughput, latency, data retention, disk usage, etc. Stay alert for issues and keep an eye on the health of broker nodes.
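
One metric worth watching alongside these is consumer lag, meaning how far a consumer group's committed offset trails the end of each partition. The sketch below computes it from two offset maps. The offsets and the alert threshold are made-up numbers, and in practice you would read the offsets from your monitoring tooling.

```python
def consumer_lag(end_offsets: dict, committed: dict) -> dict:
    """Per-partition lag: log end offset minus the group's committed offset."""
    return {p: end - committed.get(p, 0) for p, end in end_offsets.items()}

# Hypothetical offsets for a topic with three partitions.
lag = consumer_lag(
    end_offsets={0: 1500, 1: 1480, 2: 1510},
    committed={0: 1500, 1: 1200, 2: 1505},
)

LAG_ALERT_THRESHOLD = 100  # Hypothetical threshold
lagging_partitions = [p for p, n in lag.items() if n > LAG_ALERT_THRESHOLD]
```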

7 Common Kafka Architecture Design Mistakes & Their Solutions

Kafka comes with potential design pitfalls that may create challenges if you are not careful. Let’s look at some of these common design mistakes and their practical solutions so you can fully benefit from Kafka for building event-driven systems.

Mistake #1: Abandoning databases entirely instead of using them alongside events

In event-driven architectures, logs of event data become the primary source of truth. However, databases remain essential for flexible queries. For instance, retrieving data by attributes like username or email requires secondary indexes, which go beyond what partition keys can offer.

Solution: Using databases and event logs

Databases should be considered as a complementary solution to event logs, not as a replacement. They help in materializing different projections of events, offer query flexibility, and handle tasks that involve secondary keys. This way, existing databases in legacy systems can also be integrated with event logs through change data capture pipelines.
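
As a rough illustration of materializing a projection, the sketch below replays a list of events into an in-memory SQLite table with an index on email, so that lookups by a secondary key become a simple query. The event types, table layout, and helper names are hypothetical.

```python
import sqlite3

# In-memory database standing in for the service's query store.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (user_id TEXT PRIMARY KEY, email TEXT)")
db.execute("CREATE INDEX idx_users_email ON users (email)")  # Secondary key

def project(event: dict) -> None:
    """Apply an event to the projection, keeping only the latest email per user."""
    db.execute(
        "INSERT OR REPLACE INTO users (user_id, email) VALUES (?, ?)",
        (event["user_id"], event["email"]),
    )

def find_user_id_by_email(email: str):
    """Look up a user by email, returning None if there is no match."""
    row = db.execute("SELECT user_id FROM users WHERE email = ?", (email,)).fetchone()
    return row[0] if row else None

# Hypothetical events read from the log in order.
events = [
    {"type": "UserCreated", "user_id": "u-1", "email": "a@example.com"},
    {"type": "EmailChanged", "user_id": "u-1", "email": "b@example.com"},
    {"type": "UserCreated", "user_id": "u-2", "email": "c@example.com"},
]
for event in events:
    project(event)
```

The event log stays the source of truth, and the table can be dropped and rebuilt from it at any time.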

Mistake #2: Disregarding the need for schema management

Event-driven systems prioritize capturing event data over static models. However, event schemas, whether implicit or explicit, still hold significant importance. These schemas, which will evolve over time as per business requirements, can impact downstream consumers when altered or extended.

Solution: Emphasizing schema registry and evolution strategies

To make sure that your system can carry out effective schema management:

- Keep a record of event schemas in a central registry that producers and consumers access. This offers a contractual understanding of the event structure.

- Adopt strategies like adding optional fields or requiring new consumers to manage previous schemas when dealing with schema changes. You can also use tools like Estuary Flow that offer automatic schema inference.
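
The "optional field" strategy can be sketched as a tolerant reader: the consumer requires only the fields it depends on, fills in defaults for optional ones, and ignores anything it doesn't know. The field names and the `referral_code` addition below are hypothetical, and a schema registry would normally enforce these rules for you.

```python
# Hypothetical schema: required fields plus an optional field added in a later version.
REQUIRED_FIELDS = ("user_id", "email")
OPTIONAL_DEFAULTS = {"referral_code": None}

def read_signup(event: dict) -> dict:
    """Read a signup event written with either the old or the new schema."""
    missing = [field for field in REQUIRED_FIELDS if field not in event]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    result = {field: event[field] for field in REQUIRED_FIELDS}
    for field, default in OPTIONAL_DEFAULTS.items():
        result[field] = event.get(field, default)
    return result  # Unknown extra fields are ignored.

old_event = {"user_id": "u-1", "email": "a@example.com"}
new_event = {"user_id": "u-2", "email": "b@example.com", "referral_code": "FRIEND10", "plan": "pro"}

old_result = read_signup(old_event)
new_result = read_signup(new_event)
```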

Mistake #3: Over-customizing event consumers

Writing event data is relatively straightforward but consuming and processing events is complex. Building custom frameworks to manage aspects like aggregating events, joining events from different streams, or managing local states can sidetrack focus from core product development.

Solution: Leveraging existing streaming frameworks

Frameworks like Kafka Streams can manage intricate event-processing tasks efficiently. They handle the partitioning of events, state redistribution during scaling, and assure fault tolerance and rebalancing. This prevents operational complexities from infiltrating the application layer, which lets services focus on delivering direct value through business logic.

Mistake #4: Overuse of event sourcing

Event sourcing is a popular pattern where changes to an application state are stored as a series of events. While it can be advantageous, it's not the best fit for every situation. Applying it everywhere can add complexity, degrade performance, and increase the risk of data inconsistency.

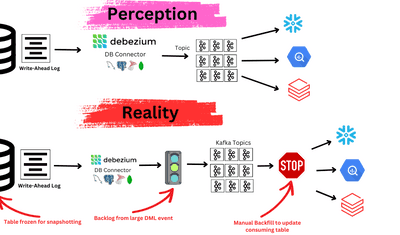

Solution: Combining CRUD & Change Data Capture (CDC)

To strike a balance, combine simple CRUD operations with CDC. This way, the majority of use cases can be solved through simple database reads and CDC can be used to generate materialized views when complex queries arise.

Mistake #5: Publishing large payload events

Publishing large payload events increases latency, reduces throughput, and adds memory pressure. These systems are more costly to run and harder to scale since resource requirements grow quickly with payload size.

Solutions:

You can use different strategies to address this issue of high-volume data:

- When dealing with large payloads, split the messages into chunks to reduce the pressure on your Kafka brokers.

- Compressing payloads mitigates the impact on broker performance and latency. Choose a suitable compression type that works best with your payload to get the most benefits.

- For very large payloads, store the payload in an object store, like S3, and pass a reference to the object in the event payload. This approach lets you handle any payload size without impacting latency.
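
The compression and object-store strategies above can be combined, as in the sketch below. It compresses each payload, sends small ones inline, and stores large ones externally while passing only a reference in the event. A plain dictionary stands in for an object store like S3, and the size threshold, key format, and helper names are assumptions for illustration.

```python
import json
import uuid
import zlib

object_store: dict = {}     # In-memory stand-in for an object store such as S3
MAX_INLINE_BYTES = 1024     # Hypothetical threshold for inlining a payload

def build_event(payload: dict) -> dict:
    """Compress the payload and either inline it or store it and pass a reference."""
    compressed = zlib.compress(json.dumps(payload).encode("utf-8"))
    if len(compressed) <= MAX_INLINE_BYTES:
        return {"inline": compressed}
    key = f"payloads/{uuid.uuid4()}"
    object_store[key] = compressed
    return {"ref": key}

def read_event(event: dict) -> dict:
    """Resolve the payload from the event itself or from the object store."""
    data = event["inline"] if "inline" in event else object_store[event["ref"]]
    return json.loads(zlib.decompress(data).decode("utf-8"))
```

With this approach the event that travels through the broker stays small regardless of payload size, at the cost of an extra read from the object store on the consumer side.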

Mistake #6: Not handling duplicate events

Most event streaming platforms, including Kafka in its default configuration, provide at-least-once delivery, which can result in duplicate events. This can have unwanted side effects like incorrect data updates or multiple calls to third-party APIs.

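Solution: Using platforms offering exactly-once semantics

Data streaming platforms like Estuary Flow offer exactly-once semantics, so each event takes effect exactly once even if it is delivered more than once. This gives you transactional consistency rather than the weaker guarantee of at-least-once delivery. Kafka also supports exactly-once processing through idempotent producers and transactions when it is configured for them.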
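
Where duplicates can still reach a consumer, a common complementary safeguard is to make the handler idempotent by remembering which event IDs it has already applied. The sketch below assumes each event carries a unique `event_id`, and it keeps the set of seen IDs in memory. A real service would persist that set together with the state it protects.

```python
processed_ids: set = set()
account = {"balance": 0}

def handle_payment(event: dict) -> bool:
    """Apply the event once and return False if it was a duplicate."""
    if event["event_id"] in processed_ids:
        return False  # Already applied, so skip the side effect.
    account["balance"] += event["amount"]
    processed_ids.add(event["event_id"])
    return True

# Hypothetical deliveries where the first event arrives twice.
deliveries = [
    {"event_id": "e-1", "amount": 40},
    {"event_id": "e-2", "amount": 10},
    {"event_id": "e-1", "amount": 40},  # Redelivered duplicate
]
results = [handle_payment(event) for event in deliveries]
```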

Mistake #7: Not planning properly for scalability needs

Scalability is a major aspect to consider in event-driven architectures. One significant advantage of event-driven systems is the independent scalability of compute and storage. However, proactive planning for scaling is important.

When it comes to compute, scaling stateful stream processors can be challenging. As the system grows, rebalancing processor instances gets trickier. For storage, the naive approach of adding more storage nodes works but it can become expensive.

Solution: Prioritizing scalability planning in advance

Consider the scalability of both compute and storage elements at the early stages of system design:

- For compute, use a managed stream processing service, or a framework like Kafka Streams, to handle the scaling of stream processing.

- For storage, adopt a tiered strategy, with less frequently accessed data moved to cheaper storage and recent data kept in fast storage.

2 Real-World Examples Of Kafka Event-Driven Architecture

Let's take a look at 2 case studies where companies used Kafka event streaming to drive digital transformation and gain a competitive advantage.

Example 1: AO's Transition To Online Retail

AO, a top UK electrical retailer, faced a big problem when the COVID-19 pandemic caused a huge increase in online shopping. This sudden rise in demand meant AO had to quickly grow and adjust to handle the high number of orders.

The Solution

AO implemented a real-time event streaming platform powered by Apache Kafka. This unified historical data with real-time clickstream events and digital signals to let AO's data science teams deliver hyper-personalized customer experiences.

Kafka Streams was pivotal in reducing latency and improving personalization. The platform also integrated different data sources like SQL Server and MongoDB, expanding AO's cloud footprint across Fargate, Lambda, and S3.

The Benefits

The real-time platform delivered tremendous value:

- Accelerated conversion rates: Personalization drove a 30% increase in conversion rates.

- Real-time inventory: Integrating stock data into customer journeys improved product offerings and visibility.

- Faster innovation: Decoupled data access via events allowed teams to rapidly build new capabilities and use cases.

- Lean development: With a unified platform, developers focused on creating differentiating features rather than wrestling with infrastructure.

- Enhanced personalization: Unified customer profiles helped AO tailor the payment service, product suggestions, and promotions which improved customer experiences.

Example 2: 10x Banking Modernization

Traditional banks face immense pressure to go digital because of the fast-growing fintech companies that are changing the financial industry. But limited by outdated systems, they struggle to keep up. This challenge spurred 10x Banking to reimagine the core banking infrastructure it offered.

The Solution

10x Banking built SuperCore, a cloud-native core banking platform delivered "as-a-service". Powered by Kafka-based real-time data streaming, SuperCore equipped banks to compete digitally.

Kafka provides these key capabilities to the new system:

- Agile delivery of hyper-personalized customer experiences.

- An event-driven architecture to power SuperCore's core services.

- Streaming data ingestion at a massive scale to process business events in real time.

The Benefits

With SuperCore, banks can:

- Launch new products and services faster

- Deliver personalized recommendations and insights

- Empower developers to innovate rapidly on a cloud-native platform

- Reduce total cost of ownership by modernizing legacy infrastructure

Estuary Flow: A Fully Managed Alternative And Data Lake For Apache Kafka

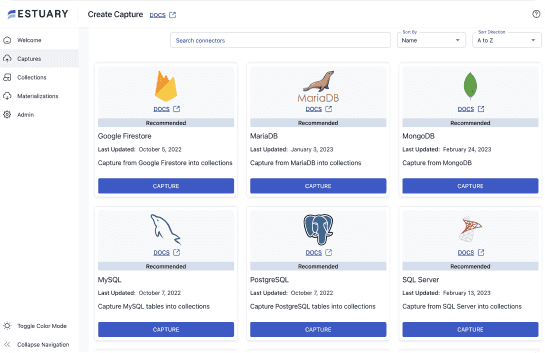

Built on Gazette, Estuary Flow is our DataOps platform that not only stands out as a compelling alternative to Apache Kafka but also serves as a powerful complement for implementing event-driven architectures.

Estuary Flow's strong point is its automation, which gets rid of the complex infrastructure management often linked to Kafka. Flow’s engine mirrors Kafka's power, but it offers a user-friendly, fully-managed solution that frees your teams from demanding data engineering tasks.

By also serving as a data lake that can seamlessly interface with Kafka, Flow enhances the overall capabilities of the architecture, making it a powerful combination for organizations dealing with large-scale data processing and analytics requirements.

While Kafka can be used as a data lake, it brings added operational workload and storage costs. Instead, Estuary offers an integrated real-time data lake with support for backfilling historical data. This allows for high-throughput, low-latency real-time pipelines without the need for manually building your own setup.

Key features of Estuary Flow are:

- Robust architecture: It is built for resiliency with exactly-once semantics for consistency.

- Scalability and reliability: It provides a robust, scalable platform to handle demanding data pipelines.

- Data monitoring and troubleshooting: Flow gives visibility into pipeline health for early issue detection.

- Extensive data connectors: It offers built-in connectors to simplify integrating data systems into pipelines.

- Streamlined infrastructure management: Flow automates complex infrastructure setup and management.

- Real-time database integration: It enables loading database data into real-time dashboards and ML models.

- Transformative data processing: Flow lets you transform data in flight through cleansing, deduplication, formatting, and more.

- Data lake functionality: Estuary Flow serves as an inbuilt data lake, providing a centralized storage solution for raw, structured, semi-structured, and unstructured data from various sources.

- Historical data backfilling: Estuary supports the integration of historical data, allowing for a comprehensive view for analysis and processing.

Conclusion

When done right, Kafka event-driven architecture turns businesses into super agile, data-driven machines. It's not just about meeting today's demands; it's all about future-proofing your applications too. It can effectively manage spikes in user activity, making data processing a breeze.

Estuary provides a fully-managed alternative to Kafka that automates infrastructure complexities through its advanced cloud platform. With intuitive dashboards and extensive built-in connectors, Flow makes event streaming accessible to all users.

If you are looking for an alternative in implementing event-driven architecture or addressing the challenges often associated with open-source Kafka, Estuary Flow stands out as an excellent pick. Sign up for a free Estuary Flow account today or contact our team and see the magic of event-driven architecture done right firsthand.