Real-Time Data Streaming Architecture: Benefits, Challenges, and Impact

Real-time data streaming architecture is a potent tool for teams in a range of industries… if you have the right skills and infrastructure.

We’ve all seen the importance of data grow over the past decade or so, and for good reason. Data provides market insight and observability, and it drives many business operations, from logistics to customer service. It has become one of the most valuable commodities in almost every industry.

To keep up with the growing demand for data, organizations needed new ways to process and transmit it.

In recent years, real-time data streaming has been adopted across sectors. It allows organizations to process and deliver data as soon as it is generated.

With this technology, business analysts can receive the data they need to analyze markets in real time, and that’s just one example. Across your organization, real-time data streaming provides fresh data, which leads to more accurate, up-to-date insights.

In this article, you’ll learn what real-time data streaming architecture is, along with its benefits, limitations, and use cases across various industries.

What is Real-Time Data Streaming Architecture?

Real-time data streaming architecture comprises two technologies: data streaming and real-time processing.

- Data streaming is the continuous sending and receiving of data.

- Real-time processing ensures that data is processed within a very short period of time (usually milliseconds).

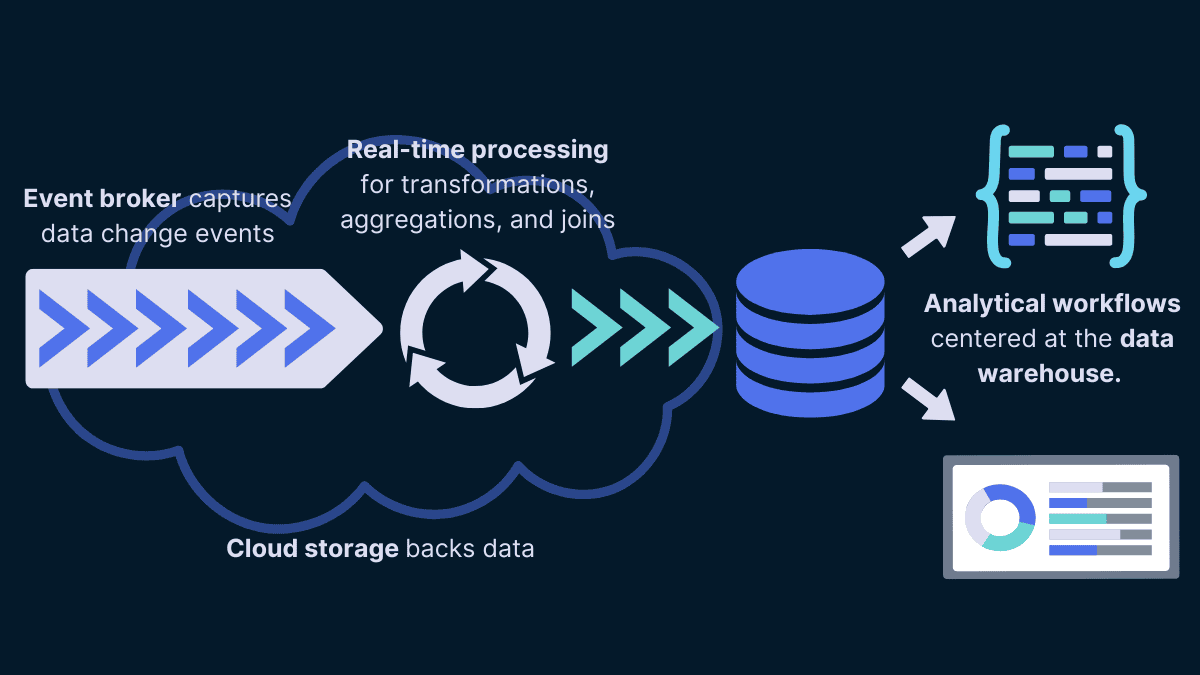

Real-time streaming architecture handles data in terms of change events. That is, when something changes at the data source, that change is ingested and moved downstream for processing immediately. You can accomplish this with an event broker, such as Kafka or Gazette.
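Log-based brokers such as Kafka and Gazette store events in append-only logs, and each consumer reads at its own pace by tracking an offset into the log. The following is a minimal in-memory sketch of that idea, assuming a simple change-event shape. The `ChangeEvent`, `EventLog`, and `Consumer` classes are hypothetical stand-ins for a real broker and have no persistence, partitioning, or networking.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One change captured at the source (hypothetical schema for illustration)."""
    table: str
    key: str
    op: str                                # "upsert" or "delete"
    value: Optional[Dict[str, Any]] = None


@dataclass
class EventLog:
    """Toy append-only log: events are only ever appended, never modified."""
    events: List[ChangeEvent] = field(default_factory=list)

    def append(self, event: ChangeEvent) -> int:
        """Append an event and return its offset (its position in the log)."""
        self.events.append(event)
        return len(self.events) - 1

    def read(self, offset: int, max_events: int = 100) -> List[ChangeEvent]:
        """Return up to max_events events starting at the given offset."""
        return self.events[offset:offset + max_events]


class Consumer:
    """Reads the log independently of other consumers, remembering where it left off."""

    def __init__(self, log: EventLog) -> None:
        self.log = log
        self.offset = 0

    def poll(self) -> List[ChangeEvent]:
        batch = self.log.read(self.offset)
        self.offset += len(batch)
        return batch


log = EventLog()
log.append(ChangeEvent("orders", "o-1", "upsert", {"total": 40}))
analytics = Consumer(log)
print([e.key for e in analytics.poll()])   # ['o-1']
log.append(ChangeEvent("orders", "o-1", "delete"))
print([e.op for e in analytics.poll()])    # ['delete']
```

Because the log is never rewritten, a second consumer added later can start at offset 0 and replay every change, which is one reason log-based brokers suit change events.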

What exactly happens after the broker captures a change event? That depends on your specific architecture, goals, and the data itself. There may be transformation, aggregation, or validation steps. The data is then sent to a downstream system (sometimes called the consumer) for immediate analytics or to trigger further action.
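The validation, transformation, and aggregation steps mentioned above can be chained so that each event flows through every stage as soon as it arrives. Below is a minimal sketch using Python generators; the event fields (`store`, `amount` in cents) and the normalization rules are hypothetical.

```python
from typing import Dict, Iterable, Iterator


def validate(events: Iterable[dict]) -> Iterator[dict]:
    """Drop events missing a store or carrying a non-numeric or negative amount."""
    for e in events:
        if "store" in e and isinstance(e.get("amount"), (int, float)) and e["amount"] >= 0:
            yield e


def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Normalize store names and convert cents to dollars (hypothetical rules)."""
    for e in events:
        yield {"store": e["store"].strip().lower(), "dollars": e["amount"] / 100}


def aggregate(events: Iterable[dict]) -> Iterator[Dict[str, float]]:
    """Keep a running revenue total per store and emit the updated total after each event."""
    totals: Dict[str, float] = {}
    for e in events:
        totals[e["store"]] = totals.get(e["store"], 0.0) + e["dollars"]
        yield {e["store"]: totals[e["store"]]}


source = [
    {"store": " Berlin ", "amount": 1250},
    {"store": "Paris", "amount": -5},      # rejected: negative amount
    {"amount": 300},                       # rejected: no store
    {"store": "berlin", "amount": 750},
]
# The downstream consumer receives each updated total as it is produced.
downstream = list(aggregate(transform(validate(source))))
print(downstream)  # [{'berlin': 12.5}, {'berlin': 20.0}]
```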

Finally, the event is often used to update stored data in a data warehouse or similar system, so that the change is recorded for future use.
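Applying change events to stored data can be sketched as follows, assuming an in-memory SQLite database as a stand-in for a data warehouse and a hypothetical `customers` table. Inserts and updates are treated as upserts and deletes remove the row, so the table mirrors the current state of the source.

```python
import sqlite3

# In-memory SQLite stands in for a data warehouse (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id TEXT PRIMARY KEY, email TEXT)")


def apply_change(conn: sqlite3.Connection, event: dict) -> None:
    """Apply one change event so the table reflects the latest state of the source."""
    if event["op"] == "delete":
        conn.execute("DELETE FROM customers WHERE id = ?", (event["id"],))
    else:  # inserts and updates are both handled as an upsert
        conn.execute(
            "INSERT OR REPLACE INTO customers (id, email) VALUES (?, ?)",
            (event["id"], event["email"]),
        )
    conn.commit()


changes = [
    {"op": "insert", "id": "c1", "email": "a@example.com"},
    {"op": "insert", "id": "c2", "email": "b@example.com"},
    {"op": "update", "id": "c1", "email": "a.new@example.com"},
    {"op": "delete", "id": "c2"},
]
for change in changes:
    apply_change(conn, change)

print(conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall())
# [('c1', 'a.new@example.com')]
```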

Real-time data streaming architecture works fast and constantly processes small packets of data. When you build this kind of architecture from scratch, there is a high risk of ending up with incomplete or low-quality data, for example because events are dropped, duplicated, or arrive out of order. It also takes a lot of engineering effort to maintain.
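Duplicates are one common source of low-quality data: some brokers and pipelines deliver events at least once, so a consumer may receive the same event again after a retry. A minimal sketch of idempotent processing follows, assuming each event carries a unique `event_id` (a hypothetical field). It tracks seen IDs in an unbounded set, which a production system would need to expire or persist.

```python
from typing import Iterable, Set


class DedupingCounter:
    """Counts events, ignoring any whose ID has already been processed."""

    def __init__(self) -> None:
        self.seen: Set[str] = set()   # unbounded here; real systems expire old IDs
        self.count = 0

    def process(self, events: Iterable[dict]) -> None:
        for event in events:
            if event["event_id"] in self.seen:
                continue              # redelivered duplicate: skip it
            self.seen.add(event["event_id"])
            self.count += 1


counter = DedupingCounter()
counter.process([{"event_id": "e1"}, {"event_id": "e2"}])
counter.process([{"event_id": "e2"}, {"event_id": "e3"}])  # e2 redelivered after a retry
print(counter.count)  # 3
```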

An alternative to data streaming is the batch processing architecture, which collects large amounts of data from different sources and processes it at set intervals, typically every few hours or days. This architecture produces complete datasets, but it cannot deliver results as quickly as a real-time data streaming architecture.
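The difference between the two approaches can be illustrated with a toy sales total. This is a simplified sketch with hypothetical data: the batch version waits for the whole interval's data and computes once, while the streaming version updates its result after every event, so intermediate answers are available long before the batch run.

```python
from typing import Iterable, Iterator, List


def batch_total(events: List[float]) -> float:
    """Batch: wait until the interval's data has been collected, then compute once."""
    return sum(events)


def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    """Streaming: update the result incrementally as each event arrives."""
    running = 0.0
    for amount in events:
        running += amount
        yield running


sales = [20.0, 35.5, 10.0]
print(list(streaming_totals(sales)))  # [20.0, 55.5, 65.5]
print(batch_total(sales))             # 65.5
```

Both reach the same final answer; the streaming version simply exposes it progressively.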

Below are the main components that a real-time data streaming architecture typically uses:

- Data storage: A data lake or warehouse temporarily stores the collected data while it is being processed. Storage costs for a data streaming architecture are usually modest, because the architecture processes data immediately after collecting it from the source and then sends it on to the consumer. Examples of platforms offering data storage services are Azure Data Lake Store and Google Cloud Storage.

- Message brokers: The message broker, or event broker, is the core component that makes data streaming possible. It collects data events and transports them to downstream systems. You can use platforms such as Apache Kafka and Gazette.

- Data processing: Data transformation involves aggregating, validating, mapping, and joining data. Data has to be transformed or processed in real time before being used for analytics or operational workloads. Tools such as Apache Spark and Apache Storm can be used for this processing.

- Data analytics: Analytics tools are needed to analyze the processed data, because data only provides valuable insights after it has been sorted and analyzed. You’ll usually use a variety of BI tools or analytics platforms, often alongside transformation tools like dbt. Most of these tools integrate with your data warehouse.
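Joining is one of the processing steps listed above that is easy to picture in isolation. A common form in streaming is a stream-table join, where each incoming event is enriched with slower-changing reference data. The sketch below uses plain Python rather than Spark or Storm; the `products` catalog and the click-event fields are hypothetical.

```python
from typing import Dict, Iterable, Iterator

# Reference data that changes rarely, e.g. loaded from the warehouse (hypothetical).
products: Dict[str, dict] = {
    "p1": {"name": "Coffee grinder", "category": "kitchen"},
    "p2": {"name": "Trail shoes", "category": "outdoor"},
}


def enrich(clicks: Iterable[dict], catalog: Dict[str, dict]) -> Iterator[dict]:
    """Join each click event with product details; unknown products get a placeholder."""
    for click in clicks:
        details = catalog.get(click["product_id"], {"name": None, "category": "unknown"})
        yield {**click, **details}


clicks = [{"user": "u1", "product_id": "p2"}, {"user": "u2", "product_id": "p9"}]
for row in enrich(clicks, products):
    print(row)
```

Using a placeholder for unknown keys, rather than dropping the event, keeps late-arriving reference data from silently losing events.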

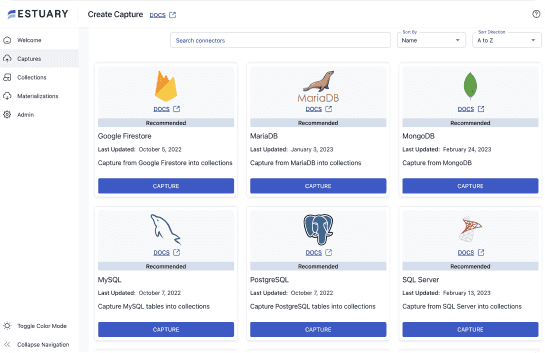

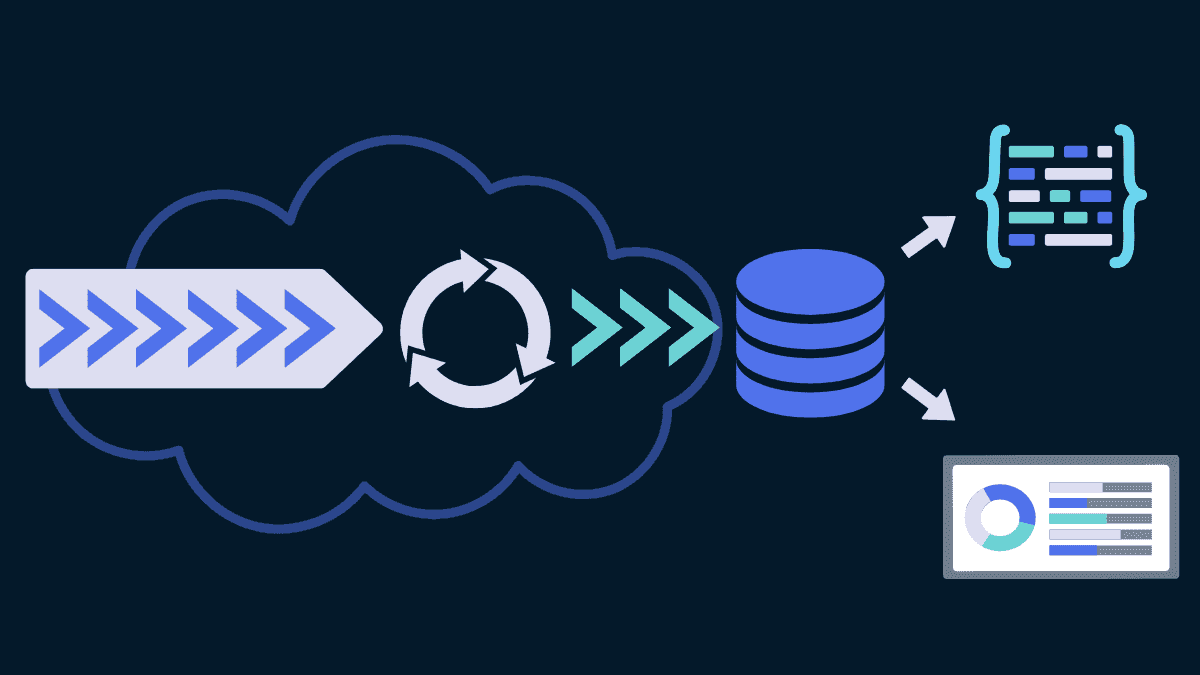

A simplified example of a real-time data streaming architecture powering real-time analytics.

Real-Time Data Streaming Architecture Use Cases

Data streaming is used in situations where data must be acted on soon after it arrives from the source. For example, readings from weather instruments can be processed as soon as they arrive to detect potential storms, and the results sent to a weather application for quick updates. Because data streaming has low latency, it lets you spot problems and raise alerts before disaster strikes.
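The weather example can be sketched as a sliding-window check on barometric pressure, since a rapid pressure drop is one common storm signal. The three-hour window and the 6 hPa threshold below are hypothetical values chosen for illustration, not a meteorological standard.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple

WINDOW_SECONDS = 3 * 3600   # look back three hours (illustrative choice)
DROP_THRESHOLD_HPA = 6.0    # hypothetical alert threshold


def storm_alerts(readings: Iterable[Tuple[int, float]]) -> Iterator[int]:
    """Yield the timestamp of each reading where pressure fell sharply within the window.

    readings: (unix_timestamp, pressure_hpa) pairs in time order.
    """
    window: Deque[Tuple[int, float]] = deque()
    for ts, pressure in readings:
        window.append((ts, pressure))
        # Evict readings older than the look-back window.
        while window[0][0] < ts - WINDOW_SECONDS:
            window.popleft()
        highest = max(p for _, p in window)
        if highest - pressure >= DROP_THRESHOLD_HPA:
            yield ts


readings = [(0, 1012.0), (3600, 1010.5), (7200, 1007.0), (10800, 1005.0)]
print(list(storm_alerts(readings)))  # [10800]
```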

Here are some common use cases of real-time data streaming architecture:

Detecting Financial Fraud

Real-time data streaming architecture is well suited to fraud detection. With it, financial institutions can spot suspicious activity as it happens and act quickly to stop fraud. The system can analyze large volumes of data, including transaction history, user behavior, and external data sources, to spot anomalies quickly.

For instance, the system can promptly flag an unexpected increase in the size of a customer’s transactions made in a foreign country.
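The foreign-transaction example can be sketched with a per-customer running average that is updated incrementally, so no transaction history needs to be reloaded. The `SPIKE_FACTOR` threshold, the home-country lookup, and the transaction fields are all hypothetical; real fraud systems combine many more signals.

```python
from typing import Dict, Iterable, Iterator, Tuple

SPIKE_FACTOR = 5.0   # hypothetical: flag amounts 5x above the customer's average


def flag_suspicious(txns: Iterable[dict], home_country: Dict[str, str]) -> Iterator[dict]:
    """Flag foreign transactions far larger than the customer's running average."""
    stats: Dict[str, Tuple[int, float]] = {}   # customer -> (count, mean amount)
    for t in txns:
        count, mean = stats.get(t["customer"], (0, 0.0))
        is_foreign = t["country"] != home_country.get(t["customer"])
        if count > 0 and is_foreign and t["amount"] > SPIKE_FACTOR * mean:
            yield t
        # Update the running mean incrementally (flagged transactions are included too).
        count += 1
        mean += (t["amount"] - mean) / count
        stats[t["customer"]] = (count, mean)


home = {"alice": "US"}
txns = [
    {"customer": "alice", "amount": 40.0, "country": "US"},
    {"customer": "alice", "amount": 60.0, "country": "US"},
    {"customer": "alice", "amount": 900.0, "country": "BR"},
]
print([t["amount"] for t in flag_suspicious(txns, home)])  # [900.0]
```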

Healthcare Surveillance

Real-time data streaming is well suited to medical facilities such as hospitals, where up-to-date health data and metrics are essential for knowing a patient’s status.

Healthcare monitoring benefits from real-time data streaming architecture. It can continuously check patients’ vital signs and notify medical staff of any abrupt changes.

Medical practitioners can use this to make quick decisions and act to prevent health complications. Streaming architecture can also track patients’ medication intake in real time, helping ensure they take the right dosage at the right time.
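The vital-sign monitoring described above can be sketched as a per-patient check on each incoming heart-rate reading, alerting on out-of-range values or abrupt jumps from the previous reading. The thresholds are hypothetical and are not clinical guidance; bed identifiers stand in for patient IDs.

```python
from typing import Dict, Iterable, Iterator, Tuple

MAX_JUMP_BPM = 25        # hypothetical threshold, not clinical guidance
SAFE_RANGE = (40, 130)   # hypothetical bounds for illustration only


def heart_rate_alerts(readings: Iterable[Tuple[str, int]]) -> Iterator[str]:
    """Yield an alert for out-of-range or abruptly changing heart rates, per patient."""
    last: Dict[str, int] = {}
    low, high = SAFE_RANGE
    for patient, bpm in readings:
        if not low <= bpm <= high:
            yield f"{patient}: heart rate {bpm} bpm out of range"
        elif patient in last and abs(bpm - last[patient]) > MAX_JUMP_BPM:
            yield f"{patient}: heart rate changed from {last[patient]} to {bpm} bpm"
        last[patient] = bpm


stream = [("bed-4", 72), ("bed-7", 80), ("bed-4", 75), ("bed-4", 110), ("bed-7", 135)]
for alert in heart_rate_alerts(stream):
    print(alert)
```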

Personalization in E-Commerce

Real-time data streaming architecture can be used to personalize e-commerce experiences. By evaluating customer data in real time, businesses can tailor product recommendations and promotions to individual customers.
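A very simple version of this keeps each user’s most recent product views and recomputes recommendations after every view event. The sketch below assumes a hypothetical `PROMOTIONS` mapping and a five-view window; production recommenders are far more sophisticated, but the update-on-every-event pattern is the same.

```python
from collections import Counter, defaultdict, deque
from typing import Deque, Dict, List

RECENT_VIEWS = 5  # how many recent views shape recommendations (illustrative)

# Hypothetical products to promote for each category.
PROMOTIONS: Dict[str, List[str]] = {
    "outdoor": ["tent", "headlamp"],
    "kitchen": ["kettle", "knife set"],
}

# Per-user window of recently viewed categories; old views fall off automatically.
recent: Dict[str, Deque[str]] = defaultdict(lambda: deque(maxlen=RECENT_VIEWS))


def on_view(user: str, category: str) -> List[str]:
    """Record a product-view event and return updated recommendations for the user."""
    recent[user].append(category)
    top_category, _ = Counter(recent[user]).most_common(1)[0]
    return PROMOTIONS.get(top_category, [])


on_view("u1", "kitchen")
on_view("u1", "outdoor")
print(on_view("u1", "outdoor"))  # ['tent', 'headlamp']
```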

Metrics for Social Media

Real-time data streaming architecture is also helpful in social media analytics.

By analyzing social media data streams in real time, it offers insights into customer sentiment, trends, and opinions. This enables companies to respond immediately to customer feedback and adjust their marketing plans as needed.
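Trend detection, one of the insights mentioned above, can be sketched as hashtag counts over a sliding window of the most recent posts. Counts are updated incrementally: each new post adds its hashtags, and once the window is full the oldest post’s hashtags are subtracted. The class name and window size are hypothetical.

```python
import re
from collections import Counter, deque
from typing import Deque, List, Tuple

HASHTAG = re.compile(r"#(\w+)")


class TrendingHashtags:
    """Counts hashtags over the most recent `window_size` posts, updating incrementally."""

    def __init__(self, window_size: int = 1000) -> None:
        self.window_size = window_size
        self.posts: Deque[List[str]] = deque()
        self.counts: Counter = Counter()

    def add_post(self, text: str) -> None:
        tags = [t.lower() for t in HASHTAG.findall(text)]
        self.posts.append(tags)
        self.counts.update(tags)
        # Once the window overflows, remove the oldest post's hashtags.
        if len(self.posts) > self.window_size:
            self.counts.subtract(self.posts.popleft())

    def top(self, n: int = 3) -> List[Tuple[str, int]]:
        """Return the n most frequent hashtags in the window, skipping zero counts."""
        return [(tag, c) for tag, c in self.counts.most_common(n) if c > 0]


trends = TrendingHashtags(window_size=2)
trends.add_post("#launch #tech")
trends.add_post("#tech news")
trends.add_post("More #tech and #ai")
print(trends.top())  # [('tech', 2), ('ai', 1)]
```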

The Benefits of Using Real-Time Data Streaming Architecture

Here are the main benefits of using real-time data streaming architecture:

Faster, More Effective Decision-Making

Faster decision-making is one of the main advantages of real-time data streaming architecture. By analyzing data as it arrives, organizations can make informed decisions quickly. This is particularly important in fields like banking, where split-second choices can significantly affect investment outcomes.

Customer Personalization

Real-time data streaming infrastructure also makes personalized customer experiences possible. By evaluating consumer data in real time, organizations can offer individualized recommendations and promotions, boosting customer satisfaction and loyalty.

Enhanced Security

Real-time data streaming architecture can also improve security. By continuously monitoring data streams, organizations can spot potential security issues and act quickly to contain them. This is crucial in sectors like banking, where security breaches can have serious repercussions.
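Continuous monitoring of this kind can be sketched as a per-user sliding window over failed login events, raising an alert when failures exceed a limit within the window, a common brute-force signal. The window length and limit are hypothetical values, and this sketch alerts on every further failure while the user stays above the limit.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

WINDOW_SECONDS = 60   # hypothetical detection window
MAX_FAILURES = 5      # hypothetical threshold


def brute_force_alerts(events: Iterable[Tuple[int, str, bool]]) -> Iterator[Tuple[int, str]]:
    """Yield (timestamp, user) when a user exceeds MAX_FAILURES failed logins in the window.

    events: (unix_timestamp, user, success) tuples in time order.
    """
    failures: Dict[str, Deque[int]] = defaultdict(deque)
    for ts, user, success in events:
        if success:
            continue
        recent = failures[user]
        recent.append(ts)
        # Drop failures that fall outside the sliding window.
        while recent[0] <= ts - WINDOW_SECONDS:
            recent.popleft()
        if len(recent) > MAX_FAILURES:
            yield ts, user


events = [(t, "mallory", False) for t in range(0, 60, 10)]   # six failures in 50 seconds
events += [(55, "bob", True), (120, "mallory", False)]
print(list(brute_force_alerts(events)))  # [(50, 'mallory')]
```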

The Limitations of Using Real-Time Data Streaming Architecture

Data streaming architecture is complex and challenging because you have to manage many components. These systems also generate a continuous, high volume of logs, which can make it hard to track down the source of a problem.

Below are some limitations that can hold back the adoption of real-time data streaming architecture.

Complexity

Real-time data streaming architecture is complicated and calls for specialized knowledge and skills.

Earlier, we introduced several tools used in real-time data streaming architecture, such as Apache Kafka.

These are sophisticated tools, and you usually need to combine several of them to fully implement a real-time data streaming architecture. As a result, implementing this architecture takes more knowledge, skill, and time than implementing a batch processing architecture.

Data Quality

Real-time data streaming architecture depends heavily on data accuracy. Companies must ensure that their data sources are reliable and accurate, and the real-time architecture must include fallback measures in case of outages or errors.
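One widely used safeguard is to validate every record and route failures to a dead-letter queue, instead of silently dropping them or halting the pipeline, so they can be inspected and replayed later. Below is a minimal sketch assuming a hypothetical two-field schema; it is deliberately strict, so an integer `amount` would also be rejected.

```python
from typing import List, Tuple

REQUIRED_FIELDS = {"order_id": str, "amount": float}  # hypothetical schema


def validate_record(record: dict) -> List[str]:
    """Return the problems found in a record; an empty list means it is valid."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"{name} should be {expected_type.__name__}")
    return problems


def route(records: List[dict]) -> Tuple[List[dict], List[dict]]:
    """Split records into valid ones and a dead-letter queue annotated with the errors."""
    valid, dead_letters = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            dead_letters.append({"record": record, "errors": problems})
        else:
            valid.append(record)
    return valid, dead_letters


valid, dlq = route([
    {"order_id": "A1", "amount": 19.99},
    {"order_id": "A2"},
    {"order_id": "A3", "amount": "12"},
])
print(len(valid), [entry["errors"] for entry in dlq])
# 1 [['missing amount'], ['amount should be float']]
```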

Scalability

Scaling real-time data streaming infrastructure can be difficult. As data volume grows, the system can struggle to keep up, which can slow processing and cause delays.

To be successful, you need a real-time data architecture that’s designed to scale from the ground up.
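Designing for scale often starts with partitioning. Brokers such as Kafka split a stream into partitions by key, so several consumers can share the load while all events for a given key stay in order on one partition. The sketch below shows the idea with `zlib.crc32` as the stable hash; this is a stand-in chosen for simplicity, not Kafka’s own partitioner, and the partition count is illustrative.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

NUM_PARTITIONS = 4  # illustrative; more partitions allow more parallel consumers


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition with a stable hash so a key always lands on the same one.

    Python's built-in hash() is randomized per process for strings, so it would not
    give the same partition across restarts; crc32 does.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_events(events: List[Tuple[str, str]]) -> Dict[int, List[Tuple[str, str]]]:
    """Group (key, payload) events by partition, keeping arrival order within each one."""
    partitions: Dict[int, List[Tuple[str, str]]] = defaultdict(list)
    for key, payload in events:
        partitions[partition_for(key)].append((key, payload))
    return partitions


events = [("user-1", "login"), ("user-2", "view"), ("user-1", "purchase"), ("user-3", "view")]
for partition, batch in sorted(partition_events(events).items()):
    print(partition, batch)
```

Each partition can then be assigned to a separate consumer, so adding partitions and consumers spreads the load without breaking per-key ordering.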

Conclusion

Using data streaming architecture can make your team more competitive, because you can make decisions faster with high-quality data. A consistent stream of data helps you and your team stay ahead of your competitors.

But the engineering implications of building a data streaming architecture shouldn’t be taken lightly.

Real-time data streaming architecture has advantages and disadvantages that organizations should carefully weigh before adopting it. With the right team and infrastructure, it can be a potent tool for enterprises in a range of industries.

If you’d like to go more in-depth with an engineering example, you can check out the source code on GitHub for Estuary Flow, a real-time data pipeline platform that includes many of the components of a real-time data streaming architecture and avoids many common pitfalls. For even more insight on how Flow was built, you can talk directly with the engineering team on Slack.