Why you don’t actually need a reverse ETL platform

If you set up your architecture right, you just need one, robust data pipeline system.

(You just need one, robust data pipeline system.)

If you’re familiar with data pipeline products, odds are you’ve run into some that market themselves as reverse ETL platforms. Others may not embrace the label, but acknowledge that they support this capability.

So, what’s the deal with reverse ETL? Does it even need to be a thing? And how can you implement reverse ETL in your data stack?

In this post, I’ll argue that the answers to these three questions are:

It’s simply a capability that any good pipeline system should already include.

No, if you have a well-architected and integrated stack.

And,

Focus on robust, flexible data pipelines that can capture and operationalize data, and don’t worry about what’s forwards and what’s “reverse.”

As always, to set the stage (and, ahem, to appease the almighty algorithm), let’s start with some definitions.

What is reverse ETL?

Before we can dive into reverse ETL, we have to talk about the original (forwards) version, as well as the closely related concept of ELT.

The simplest definition — and one that we’ll return to — is that ELT, ETL, and reverse ETL are ultimately all data pipelines. Their job is to move data from system A to system B, and apply transformations along the way. “E” is for “extract,” “T” is for “transform,” and “L” is for “load.”

The concepts of ETL and ELT are both biased towards the ingestion aspect of a pipeline. That is, the part of the data lifecycle in which data is pulled from an external location into an organization’s unified source of truth. Often, this is a data warehouse or data lake.

The difference between ETL and ELT is hazy at best — see my earlier post to learn more. But a quick rule of thumb is that ELT is generally seen as the more modern framework. Its goal is to get data into the data warehouse or lake relatively unchanged. From there it can be analyzed and manipulated however you’d like.

Many analysis tools work directly on data in the warehouse — think dbt, and BI tools like Superset or Looker. But eventually, data will need to be operationalized. It must be piped out to where it is needed — in the appropriate format — and put to work.

This is the selling point you’ll commonly hear for reverse ETL.

Reverse ETL has been defined as “the process of moving data from a data warehouse into 3rd party systems to make data operational.”

The importance of operationalizing data

Data operationalization is the process of delivering accurate, timely data to systems where it can be used to drive action.

This is a critical part of the data lifecycle, and it requires output from a data storage system.

Examples of operational data are endless. Here are just a few:

- Using customer action data to expedite a tech support experience

- Identifying at-risk customers or sales leads while there’s still time to prevent losing them

- Tailoring hyper-personal marketing based on interactions with an online store

- Monitoring product and platform usage both to ensure the health of the application, and to provide personal messaging and metrics for users

You’ll notice two things about these use cases.

The first is that they require fresh data relatively quickly.

The second is they are mostly driven by people whose line of work is not strictly data-related. Data people like to hand-wave-ily lump all these folks into the broad category of “business people.” More specifically, they do jobs like marketing, sales, customer service, customer advocacy, and management.

These are professionals with a huge spectrum of technical skill levels, and they need more than just access to operationalized data. They also need to have a significant degree of control over it, because, of course, circumstances change constantly.

This means that it’s particularly important for data operationalization tools to be broadly usable and UI-forward.

We’ll circle back to this point later.

How the batch data paradigm created reverse ETL

So, we’ve now established that we need to get data out of storage and operationalize it. This is pretty uncontroversial.

But why do we need a whole unique category of tool to do this? How is this different from any other type of data pipeline?

The short answer: it’s not. Reverse ETL is simply a model that arose from a batch-centric paradigm and the concept of the data warehouse as the single source of truth.

The batch data paradigm

Batch data processing means performing an action on data, such as ingesting it or transforming it, at a given time interval. Because this doesn’t mirror how events happen in the real world, this type of processing introduces a lag into the data pipeline — new events aren’t reflected until the next batch run.

Contrast this to real-time processing, or event streaming, where each new piece of data that is picked up triggers an event, which is streamed through the data pipeline continuously. For more background, you can check out my post on batch vs real-time data processing.

Batch processing has long been accepted as an easier method to implement. In general, companies use batch processing to build pipelines in-house. There’s recently been widespread adoption of out-of-the-box ELT providers (Fivetran, for example). These are much easier to use, but still rely on batch processing.

Data warehouses as the single source of truth

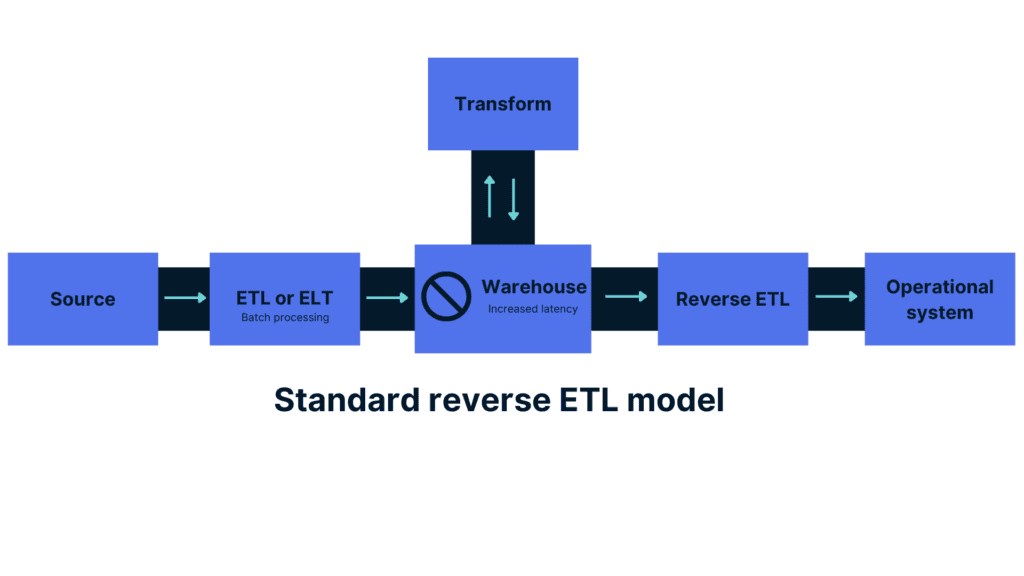

A pattern you’ll sometimes see in data architecture today — and the pattern that reverse ETL assumes you follow — has the data warehouse as the central source of truth. There’s a strong prioritization of ingesting data from other systems into the warehouse for analysis.

This is problematic on multiple levels.

The first is how, when combined with the batch ingestion methods we just discussed, the warehouse becomes a breeding ground for stale, stagnant data. With these types of pipelines, data is not flowing into the warehouse; it lands there, and when it does, it’s already somewhat out of date.

Now, this may be acceptable for exploratory analysis and transformations. That is, after all, what data warehouses are designed to support.

Where this really becomes a problem is when you also try to operationalize data from the data warehouse.

Data warehouses are very different from operational databases. You can read more about the difference here, but ultimately, when you want fresh data that a human or system can quickly act on, you should be getting that data from an operational database, which are designed for this purpose.

If you stick a batch pipeline and a data warehouse between the operational database and the destination system, you’ve already set yourself up for failure.

It doesn’t matter how good the reverse ETL platform is at getting the data back out of the warehouse, or even if it’s a real-time pipeline, because the data in the warehouse is already outdated.

Of course, there’s plenty of variety in architectures and systems of records. Regardless, the latency that comes from the batch pipeline’s termination can’t be un-done downstream.

“Just another data pipeline”

Some companies that build pipeline platforms lean into the label of “reverse ETL;” others are leery of it. This makes sense because, as we’ve seen, the conversation around reverse ETL tends to veer into the territory of poor architectural patterns.

The CEO of data pipeline platform Rudderstack, Soumyadeb Mitra, wrote a post with some great points on the technicalities involved in reverse ETL.

A couple of takeaways to highlight from his article are:

- A good pipeline platform should be able to get data into and out of storage and to various external systems, so there’s really no need for a distinction.

- Real-time pipelines (or event streaming) are important at all stages of the data lifecycle. The division of real-time and batch is where a lot of this starts to break down.

I’d like to expound on that second point a bit more.

Unified, real-time data pipelines make reverse ETL unnecessary

Let’s say you’re a company with an online store, and you’re performing analytics with the goal to improve your sales and marketing efforts. You have customer data in several SaaS tools, and you’re easily able to pipe this data into your warehouse with an ELT tool.

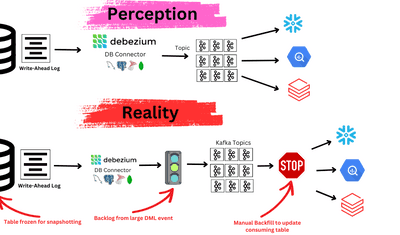

You also, critically, have the event logs from customer interactions with your online store. You want these events to reach the warehouse in real time, so you’ve gone to the trouble to set up an event-based pipeline with Kafka.

With some manipulation, you can explore and analyze all this data together in your warehouse, and model some data products that are ready to be operationalized.

Now what?

You have a low-latency data stream thanks to Kafka, which is awesome, but it terminates in the warehouse. Your data in motion has become data at rest, bottlenecked by the batch data pipeline and the warehouse. Now, your data warehouse is your best source of truth. The path of least resistance is to purchase a reverse ETL product and call it a day.

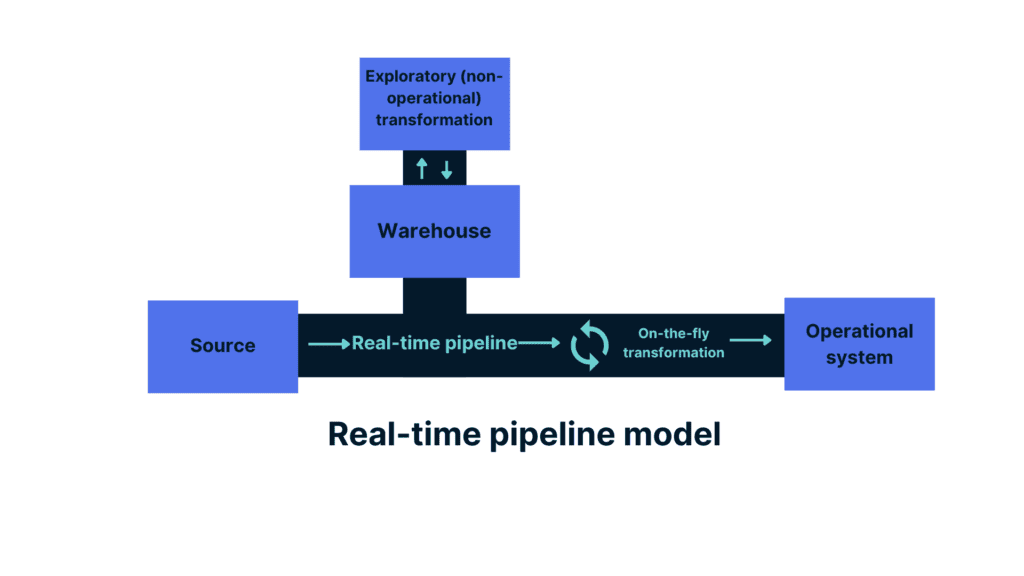

But there’s a better way: unify your data systems with a pipeline platform that is event-based first, but can also handle batch data. Pipe the data to the warehouse for exploratory analysis, of course, but operationalize it directly from the source, and apply any transformations you need on the fly. The data that gets operationalized is the same data that you analyze, but it doesn’t have to pass through the warehouse.

This is possible because, as Mitra points out, you can model tabular data (what you’d typically batch) as an event stream, and later re-build the table. But the inverse is not true.

Now, we arrive at the hard part. In today’s landscape, creating this type of event-based data integration is a huge technical barrier.

Real-time SQL platforms like Materialize can provide an approachable method for real-time data analysis, although this effectively adds a new type of data storage, and can still require additional work to fully integrate across your stack.

Kafka, as the most popular event bus and an unopinionated framework, could be a solution — for some concrete examples, check out Kai Waehner’s post on how reverse ETL can be an anti-pattern.

But Kafka is hard, and using it successfully often requires you to integrate multiple third-party connectors and services.

And, as we mentioned earlier, data operationalization needs to be accessible and transparent, or you can disempower the “business people” whose job functions require a foundation of reliable data.

Ideally, what you want is an event-driven, real-time pipeline framework that offers engineering power and also offers an intuitive, UI-forward application that business people can leverage.

Better options for reverse ETL

You may have read that last paragraph and thought: Ok, that’s nice, but does it exist?

The trend of “reverse ETL” is an interesting one, because it can both encourage you to slap a bandaid over bad architecture, or it can be a term that companies adopt for marketing when their real mission is to improve data integration overall.

In short, these pipelines are absolutely possible, and they are starting to appear. For the time being, the work of any potential customer is to read beyond the marketing terms companies use, and see what architectures they actually enable.

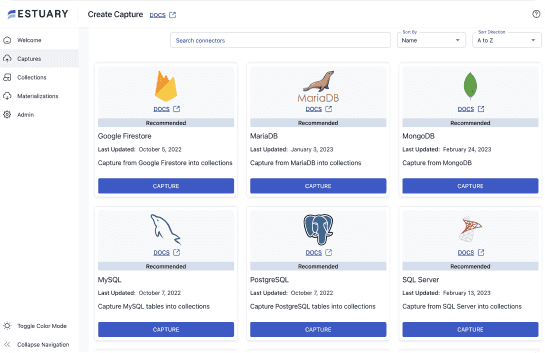

At Estuary, our platform is built around the mission to democratize access to real-time data. The reason we’ve chosen this mission is because we’re confident that the industry is on the cusp of a paradigm shift toward real-time.

Having a real-time data backbone is the solution to a host of infrastructural problems. Technologically, it’s absolutely possible, but you won’t see it start to take over until it becomes more convenient than older alternatives.

Fortunately, as an industry, we are on the cusp.